Reflecting on the ever-changing tech stack.

This month marks 18 years of working in technology jobs. It's been an incredible journey, both for me and for the tech.

Eighteen years ago, in March of 2007, I started as a technical support engineer at a mobile device management company. I sat down with a CRT monitor and an LSI desktop running Windows XP.

My day-to-day was answering questions about our products, using Webex to remote into a customer's desktop when I needed to see what they were doing. If I was lucky, my work desktop had the new LGA775 Intel Core 2 Duo processor.

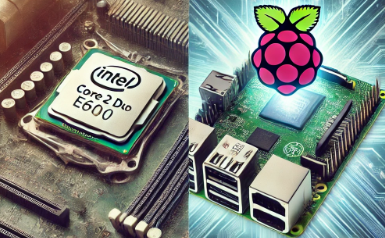

For a comparison here are the specs of the Intel Core 2 Duo E6600 and the Raspberry Pi 5:

Intel Core 2 Duo E6600 (2006)

- Architecture: 65nm Conroe (x86-64)

- Cores / Threads: 2C / 2T

- Base Clock: 2.4 GHz

- L2 Cache: 4MB shared

- TDP: 65W

- Memory Support: DDR2 (up to 8GB)

- Instruction Set: x86-64

Raspberry Pi 5 - Broadcom BCM2712 (2023)

- Architecture: 16nm ARM Cortex-A76 (ARMv8.2-A)

- Cores / Threads: 4C / 4T

- Base Clock: 2.4 GHz

- L2 Cache: 512KB per core

- L3 Cache: 2MB shared

- TDP: Estimated ~5-10W

- Memory Support: LPDDR4X-4267 (4GB/8GB models)

- Instruction Set: ARMv8.2-A (64-bit), includes modern SIMD instructions (NEON)

Which is Faster?

- The Pi 5’s ARM Cortex-A76 cores are far more efficient and modern than the Core 2 Duo’s aging Conroe cores.

- Single-core performance is somewhat comparable, but the quad-core design of the Pi 5 allows it to outperform the dual-core Core 2 Duo in multi-threaded tasks.

- The Pi 5 has a lower power draw (5-10W vs. 65W), making it significantly more efficient.

So you made it past the spec dump, way to go. Why would I compare those two? They are closer in some ways than you might think and, believe it or not, I still have both of them. This site is actually running on a Pi 5, inside a few containers, with an NVMe HAT.

Could I have done my job on a Pi 5 back in the day? Probably, except I don't think our software supported Linux (yet), and the whole ARM vs. x86-64 difference would have been a problem.

What are the specs of your earliest personal or work machine?

As for my current machines, here are the two:

Home PC: Ryzen 7 5800X, 32GB DDR4 3600MHz, Samsung 990 Pro 4TB SSD, RTX 3070 Ti

Work laptop: HP ZBook 15", i7-1370P, 32GB DDR5 5200 MT/s, 512GB NVMe storage, Intel Iris Xe graphics

Writing these down makes me wonder: why did we move from MHz to MT/s?

- Definition Differences:

  - MHz measures the frequency of the clock signal, indicating how many cycles per second the clock operates. For example, a 1000 MHz clock runs at 1 billion cycles per second.

  - MT/s, on the other hand, measures the number of data transfers that occur per second. In DDR memory, data is transferred on both the rising and falling edges of the clock signal, which doubles the number of transfers per clock cycle. Thus, a memory module rated at 1000 MT/s performs 1 billion transfers per second while its clock runs at only 500 MHz.

- Clarity in Performance: Using MT/s provides a clearer understanding of the actual data throughput. Since DDR technology allows for two transfers per clock cycle, using MHz alone could be misleading. For example, DDR3 memory rated at 1600 MT/s operates with a clock speed of 800 MHz, but the MT/s figure more accurately reflects its performance capabilities.

- Standardization: As memory technologies evolved, there was a need for a standardized way to communicate performance metrics. MT/s became a more universally accepted term in the industry, especially as data rates increased and the distinction between clock speed and effective data transfer rates became more significant.
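
To make the arithmetic concrete, here is a small Python sketch that converts an MT/s rating back to its clock speed and estimates peak bandwidth. The function names are my own, and the 64-bit bus width is an assumption (one standard desktop memory channel); real-world throughput will be lower than these theoretical peaks.

```python
def clock_mhz(transfer_rate_mts: float, transfers_per_clock: int = 2) -> float:
    """I/O clock in MHz for a given MT/s rating (DDR moves data twice per clock)."""
    return transfer_rate_mts / transfers_per_clock


def peak_bandwidth_gbs(transfer_rate_mts: float, bus_width_bits: int = 64) -> float:
    """Theoretical peak bandwidth in GB/s, assuming a 64-bit wide channel."""
    bytes_per_transfer = bus_width_bits / 8
    return transfer_rate_mts * 1e6 * bytes_per_transfer / 1e9


# The DDR3 example from the list above, plus the two machines mentioned earlier.
for label, rate in [("DDR3-1600", 1600), ("DDR4-3600", 3600), ("DDR5-5200", 5200)]:
    print(f"{label}: {clock_mhz(rate):.0f} MHz clock, "
          f"{peak_bandwidth_gbs(rate):.1f} GB/s peak per channel")
```

So the "3600MHz" DDR4 in my home PC is really running an 1800 MHz clock, which is exactly the kind of confusion MT/s is meant to avoid.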

In summary, the move from MHz to MT/s was driven by the need for a more accurate representation of data transfer rates, particularly in technologies that utilize multiple data transfers per clock cycle.